Saturday, December 31, 2011

Port For SQL Server - What is default...

Friday, December 30, 2011

SQL Script to find Expected Finish TI...

TOP SQL Server Posts for 2011 - This ...

Thursday, December 29, 2011

SQL Server Licensing ? Core Based Lic...

SQL Server Injection - What is SQL In...

Wednesday, December 28, 2011

SQL WHERE ? Video Tutorial - Prior to...

How to Backup SQL?Video Tutorial - HO...

Installing SQL Server 2008 R2 SP1 - I...

Tuesday, December 27, 2011

How to Export SQL Profiler / Trace to...

SQL Server Version / Builds List Comp...

Monday, December 26, 2011

SQL Server Management Studio (SSMS) i...

SQL Server Express with Reporting Ser...

Sunday, December 25, 2011

Installing SQL Server Express Edition...

Saturday, December 24, 2011

Installing & Configuring SQL Server Reporting Services on Cluster Environment for High Availibility

I was working on project, where there is only a one cluster box which is being used for Database Services to meet high availability requirements.

Customer, understood some reporting Services feature so asked developers to build some required reports, which was being done. Now things need to be pushed to production so a requirement for installing and configuring the Reporting Services in a Clustered environment to meet the high availability requirements.

As per the customer, things were pretty straight forward as he assumed that Reporting Services are also cluster aware as SQL Server Database engine. So customer asked me to asked a install reporting Services on the clustered environment to meet the high availability, one of major business requirement.

When I shared the activities / project plan to customer, he was shocked to see one of the design slide, which I pasted below, he never thought that there will be a Scale OUT solution to meet the high availability requirement.

Customer was not aware that “Reporting Services is not cluster-aware”, YES SQL Server Reporting Services are not cluster aware.

So let me summaries the entire activity / project with detailed description to install and configure reporting services on a clustered environment to meet high availability requirement.

In short a reporting services should be available as one name / url for user to access report server, regardless of fact on which node it is running, a kind of virtual url for report server

Broad Steps / Activites

- Install first Reporting Services Instance on NODE 1 of clustered environment, choose Install but do not configure server option during Report Server Installation Options page

- Configure Reporting Services using Reporting Services Configuration tool

- Report Server Web service URL,

- Report Manager URL,

- Report Server database

- Report server temp database etc.

- Install second Reporting Services Instance on NODE 2 of clustered environment, choose Install but do not configure server option during Report Server Installation Options page.

- Configure Reporting Services using Reporting Services Configuration tool and Connect report server to the same database you used for the first report server instance. (Instead of creating a new database, opt for Choose an existing report server database)

- Configure the Report Server Web service URL, which would be definitely a different than the first as this would be set up on NODE 2

- Join second report server instance to the scale-out deployment.

- Open the Reporting Services Configuration tool, and reconnect to the first report server instance which is running on NODE 1 and click on Scale-out Deployment to open the Scale-out Deployment page.

- You should see two entries, one for each report server instance that is connected to the report server database. The first report server instance should be joined. The second report server should display a status of "Waiting to join". If you do not see similar entries for your deployment, verify you are connected to the first report server that is already configured and initialized to use the report server database.

- On the Scale-out Deployment page, select the report server instance that is waiting to join the deployment, and click Add Server.

- Till now we have configured the Reporting Services Scale out solution and both individual reporting services instances access common reporting services database. As this point, we should be verify that both reporting services instances are working fine.

- in my case, was able to verify the first instance with url “http://NODE1/reportserver” and

“http://NODE2/reportserver”, second reporting instance - Now I need to do a configuration that a single url can be used to access services from the both url.

- in my case, was able to verify the first instance with url “http://NODE1/reportserver” and

- Configure view state validation by editing the Web.config file for Report Manager for both report server instance.

- Generate a validation key. You can use the autogenerate functionality provided by the .NET Framework or you can create the key some other way. Do not use the AutoGenerate option when setting the validationKey attribute.

- Generate a decryption key. For the decryption key, you can create an explicit value or set decryptionKey to AutoGenerate

- Open the Web.config file for Report Manager and set the <machineKey> element. You must specify the validation key, decryption key, and the type of encryption used for validation of data. Example <machineKey validationKey="43ajhk3ebc97uj826a7b3v37k903a9d5dy65" decryptionKey="86165h77a9d588a9" validation="SHA1"/>

- Configure a virtual Server name to access reporting Services. We need to do this for

- Report Manager (configuration file RSWebApplication.config), set the <ReportServerUrl> to the virtual server name and remove the entry for <ReportServerVirtualDirectory>. This step ensures that all requests coming through Report Manager are load-balanced to the report servers that are running in the scale-out deployment. The following example illustrates the syntax you should use; it specifies the virtual server name and report server virtual directory: example

<ReportServerUrl>https://MyReportPortal/reportserver</ReportServerUrl>

<ReportServerVirtualDirectory></ReportServerVirtualDirectory>

- Report Server Web service (configuration file RSReportServer.config), Set the <UrlRoot> to the virtual server address. This step ensures that all hyperlinks in reports point back to the scale-out deployment and are load-balanced accordingly. This setting is also used to complete report delivery. The following example illustrates the syntax you should use: example

<ReportServerUrl>https://MyReportPortal/reportserver</ReportServerUrl>

- This files can be found on each node at C:\Program Files\Microsoft SQL Server\MSRS10_50.MSSQLSERVER\Reporting Services\ReportServer

- Report Manager (configuration file RSWebApplication.config), set the <ReportServerUrl> to the virtual server name and remove the entry for <ReportServerVirtualDirectory>. This step ensures that all requests coming through Report Manager are load-balanced to the report servers that are running in the scale-out deployment. The following example illustrates the syntax you should use; it specifies the virtual server name and report server virtual directory: example

- Now ask your network administrator to create a new DNS record for this new virtual url (report server url) and point that to IP addresses of cluster nodes, so that if client request for that virtual url, the request should reach to any of the node on the cluster and also request if can can built a system to verify the availability of all associated IP addresses, if none of them in not available then do the resolution only with available IP addresses.

- Once that is being done, you are good to go.

So as part solution your reporting services will be running of both the nodes simultaneously, in case a any one node fail, reporting services will still be available on second node, and will be accessible to users.

It is recommended to use Load balancer, or web farm to build this solution, but none of the option was available in my case, so I worked with this workaround.

Merry Christmas !!! - Bells are ringi...

Merry Christmas !!!

Bells are ringing and everyone is singing,

It's Christmas! It's Christmas!

Wishing you a Merry Christmas and a Happy New Year.

Wednesday, December 7, 2011

How to Give Read Definition Permissio...

Tuesday, December 6, 2011

SQL Server 2012 Editions Licensing Co...

Wednesday, November 30, 2011

Install SQL Server 2008 R2 ?Step by S...

Wednesday, November 16, 2011

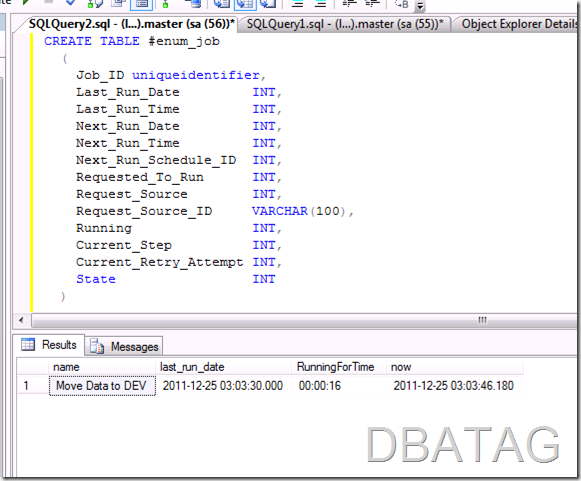

SQL Script to find Current Running Jobs and elapsed time

I do get a call from developers to check weather a any job is running on the server or not ? Mostly on Sunday morning they want to check that all data import / exports jobs finishes on time. So they wanted me to know what all the jobs are currently executing.

We can find the execution status from the Job Activity Monitor but we don't get the duration of execution in it. we can use the below query to find out the same. The Query fetches the Running jobs and calculates the time for which they are executing.

SQL Script to find current Running Jobs

CREATE TABLE #enum_job

(

Job_ID uniqueidentifier,

Last_Run_Date INT,

Last_Run_Time INT,

Next_Run_Date INT,

Next_Run_Time INT,

Next_Run_Schedule_ID INT,

Requested_To_Run INT,

Request_Source INT,

Request_Source_ID VARCHAR(100),

Running INT,

Current_Step INT,

Current_Retry_Attempt INT,

State INT

)

INSERT INTO

#enum_job EXEC master.dbo.xp_sqlagent_enum_jobs 1, garbage

SELECT

R.name ,

R.last_run_date,

R.RunningForTime,

GETDATE()AS now

FROM

#enum_job a

INNER JOIN

(

SELECT

j.name,

J.JOB_ID,

ja.run_requested_date AS last_run_date,

(DATEDIFF(mi,ja.run_requested_date,GETDATE())) AS RunningFor,

CASE LEN(CONVERT(VARCHAR(5),DATEDIFF(MI,JA.RUN_REQUESTED_DATE,GETDATE())/60))

WHEN 1 THEN '0' + CONVERT(VARCHAR(5),DATEDIFF(mi,ja.run_requested_date,GETDATE())/60)

ELSE CONVERT(VARCHAR(5),DATEDIFF(mi,ja.run_requested_date,GETDATE())/60)

END

+ ':' +

CASE LEN(CONVERT(VARCHAR(5),(DATEDIFF(MI,JA.RUN_REQUESTED_DATE,GETDATE())%60)))

WHEN 1 THEN '0'+CONVERT(VARCHAR(5),(DATEDIFF(mi,ja.run_requested_date,GETDATE())%60))

ELSE CONVERT(VARCHAR(5),(DATEDIFF(mi,ja.run_requested_date,GETDATE())%60))

END

+ ':' +

CASE LEN(CONVERT(VARCHAR(5),(DATEDIFF(SS,JA.RUN_REQUESTED_DATE,GETDATE())%60)))

WHEN 1 THEN '0'+CONVERT(VARCHAR(5),(DATEDIFF(ss,ja.run_requested_date,GETDATE())%60))

ELSE CONVERT(VARCHAR(5),(DATEDIFF(ss,ja.run_requested_date,GETDATE())%60))

END AS RunningForTime

FROM

msdb.dbo.sysjobactivity AS ja

LEFT OUTER JOIN msdb.dbo.sysjobhistory AS jh

ON

ja.job_history_id = jh.instance_id

INNER JOIN msdb.dbo.sysjobs_view AS j

ON

ja.job_id = j.job_id

WHERE

(

ja.session_id =

(

SELECT

MAX(session_id) AS EXPR1

FROM

msdb.dbo.sysjobactivity

)

)

)

R ON R.job_id = a.Job_Id

AND a.Running = 1

DROP TABLE #enum_job

Script OUTPUT

Thursday, October 27, 2011

Fireside Chat with SQL Server Enginee...

Thursday, October 13, 2011

SQL Server 2012 - SQL Server 2012 Th...

Wednesday, September 28, 2011

What?s new in PowerPivot for Excel wi...

Monday, September 26, 2011

What?s New for Business Intelligence ...

Sunday, September 25, 2011

Sample SQL Server Developer Resume ? ...

What’s New for Business Intelligence in SQL Server Denali

Microsoft Next version of SQL Server, code name Denali, has lot more new features. Let's quickly list down all those features.

- Data Quality Services (DQS)

- Master Data Services v. 2.0

- Impact Analysis and Lineage

- Integration Services Improvements

- PowerPivot v. 2.0

- New Hierarchies and KPI's

- New Visual Diagram View

- New Measure Grid

- New Data Type Table for Time Intelligence

- User Defined Self Service Alerting

- BI Semantic Model (BISM)

- New Project Crescent

- Reporting Services Improvements

- rendering to .docx format and .xlsx formats

- compressed and sip files

Download SlideDesk for More information. Shortly I would be working on these items and will come out with more details.

Sample SQL Server Developer Resume - BI

< NAME>

PROFESSIONAL SUMMARY:

- Over 7 years of experience in analyzing, designing, tuning, and developing Client/Server, Business Intelligence (BI) database applications and various segments of Software Development Life Cycle (SDLC), using MS SQL Server 2008/2005/2000 DTS/SQL Server Integration, Reporting & Analysis Services.

- Extensive SQL Development experience in Banking, Insurance and Healthcare industries with a strong understanding of data & analytics.

- Hands on experience in installation, configuration, performance tuning, optimization, backup and recovery in SQL Server 2008/2005/2000.

- Experience in Upgrading of SQL Server 2000/2005 databases to SQL Server 2005/2008.

- Extensive experience in using T-SQL (DML, DDL) in SQL Server 2008/20005/2000 platforms.

- Experienced in creating Tables, Stored Procedures, Views, Indexes, Cursors, Triggers, User Profiles, User Defined Functions, Relational Database Models and Data Integrity in observing Business Rules.

- Expert Experience in Normalization, De-normalization.

- Extensive knowledge in tuning T-SQL (DDL and DML) queries to improve the database performance and availability.

- Broad experience in Query Optimization and performance tuning of stored procedures, functions etc.

- Experience in RDBMS Concepts, Database Management Systems, Database Physical and Logical design, Data Mapping, Table normalization, Data Modeling, Creating ER Diagrams using tools such as MS Visio.

- Experience in creation of Database authentication modes, configuring permissions and assigning roles to users.

- Skilled in Business Intelligence tools like SQL Server 2005 Integration Services (SSIS), Reporting Services (SSRS) and Analysis Services (SSAS).

- Proficient in using SQL Server Integration Services (SSIS) to build Data Integration and Workflow Solutions, Extract, Transform and Load (ETL) solutions for Data warehousing applications.

- Experience in creating SSIS packages to automate the Import and Export of data to and from SQL Server 2005 using SSIS tools like Import and Export Wizard, Package Installation and BIDS.

- Expertise in building and migration of various DTS packages.

- Experience in creating package configurations like XML, SQL Server Configurations and Logging using text, XML and Windows event log.

- Experience in creating jobs, SQL Mail, Alerts and schedule SSIS Packages using SQL Server Agent.

- Ability to define and develop Report Structure with data definition, report layout and report definition language.

- Extensive experience in creating several reports such as drill down reports, parameterized reports, linked reports, sub reports, Matrix Reports and Chart reports using SQL Server Reporting Services.

- Excellent in rendering reports, managing subscriptions, creating reports with report builder, report snapshot and report cache.

- Experience in scheduling and deployment of reports and uploading files to a report server catalog from the report manger.

- Experience in SharePoint & Performance Point Servers.

- Experience in SQL Server Analysis Services (SSAS) for designing OLAP Cubes.

- Hands on experience with Dimensional Modeling, ER Modeling, Star Schema / Snowflake Schema, FACT and Dimensional tables and Operational Data Store (ODS).

- Created and Configured Data Source & Data Source Views, Dimensions, Cubes, Measures, Partitions, KPI’s & MDX Queries using SQL Server 2005 Analysis Services.

- Experience in administrative tasks such as data loading, batch jobs, data unloading, Backup, Recovery and scheduling the backups.

- Experience in Application Development Analysis, Requirement Analysis, Scoping, Developing, Debugging, Testing and Documentation of various phases in a project life cycle of Client/Server Applications.

- Good knowledge of Computer Science theory & strong in modern programming languages.

- A well-organized, goal-oriented, highly motivated and effective team leader/member with excellent analytical, troubleshooting, and problem solving Skill.

- Excellent Verbal & Written Communication skills and strong in Documentation.

- Flexible, enthusiastic and project oriented team player with solid communication and leadership skills to develop creative solution for challenging client needs.

EDUCATION AND CERTIFICATIONS:

- Bachelor of Technology in Computer Science and Engineering

- Masters in Computer Science

- Cisco Certified Network Associate (CCNA)

- Microsoft SQL Server 2008 Maintenance and Implementation

TECHNICAL SKILLS:

- Databases: SQL Server 2000/2005/2008, MS Access 2000, MS Excel, Oracle 9i/10g, Mainframes, DB2

- Programming Languages: T-SQL, PL/SQL, C, C++, HTML, XML, Java

- Operating Systems: Windows 98/2000/2003/XP/NT/Vista, Windows 2000Advanced Server, Windows 2003 Enterprise Server, UNIX.

- IDE & BI Tools: SQL Server BI Development Studio (BIDS), SharePoint, MS Visual Studio 2008/2005

- Version Control: Team Foundation Server (TFS), Visual SourceSafe (VSS).

- ETL Tools: SSIS, Data Stage, DTS

- Data modeling Tools: Erwin 7.2/4.5/4.0, MS Visio 2003/2007, Rational Rose

- Design Methodology: Unified Modeling Language 1.1 using Rational Rose.

- Packages: Microsoft Office 2007/2003/2000

PROFESSIONAL EXPERIENCE:

| Mayo Clinic | March 2010 – July 2011 |

| SQL Server Developer/SSIS/SSRS/SSAS | Minneapolis, MN |

Mayo Clinic is a not-for-profit medical practice and medical research group. It specializes in hard-to-treat diseases, and is known for innovative and effective treatments for diseases that had gone undiagnosed or under treated in the same patients with other doctors. The project is a complete solution for the Clinic which includes modules like patient registration system, doctor scheduling, lab track system, discharge system billing system, and deposit system. It is a multi-user system with great search facility. It has a dynamic facility for adding the registration type for each patient. An advanced search facility has helped clients, patients and doctors to search their desired records easily.

Responsibilities:

- Designed, Implemented and maintained Database Schema, Entity relationship diagrams, Data modeling, Tables, Stored procedures, Functions and Triggers, Constraints, Indexes, Schemas, Functions, Views, Rules, Defaults and complex SQL statements.

- Maintained the database schema in source code control (MS Team Suite Database Edition preferred).

- Migrating DTS packages to DTSX using DTSX-change.

- Involved in XML Development and XML File Parsing in SSIS and SSRS.

- Developed the PL/SQL programs and Involved in optimize stored procedures and queries for faster retrieval and Server efficiency SSAS and SSIS.

- Created automated stored procedures for day end operations using SQL Server agent.

- Coordinated with front-end for implementing logic in stored procedures and functions.

- Performance tuning of SQL queries and stored procedures using SQL Profiler and Index Tuning Wizard in SSAS and SSIS.

- Implemented failure alerts using email in SSIS.

- Involved in Object Oriented Design (UML, activity diagrams, Use cases and Object Modeling).

- Created and used extended stored procedure in SSAS.

- Snapshot Replication was been used to synchronize the data between their Different Stores.

- Scheduled Database and Transactional log backups using the Database Maintenance Plan Wizard in SSIS.

- Involved in SQL Server Mirroring and Database optimization.

- Data migration (import & export – BCP) from Text to SQL Server. Used scripts and variables, email notifications for ETL process using Data Integrator in SSIS, SSAS.

- Created jobs, alerts to run SSAS, SSRS packages periodically. Created the automated processes for the activities such as database backup processes and SSAS, SSRS Packages run sequentially using SQL Server Agent job.

- Created and Designed Data Source and Data Source Views Using SQL Server Analysis Services 2008 (SSAS).

- Created and Configured OLAP Cubes (Star Schema and Snow flex Schema) using SQL Server 2008 Analysis Services (SSAS).

- Performance tuning MDX and dimensions design and scaling to large numbers of users in SQL Server Analysis Services (SSAS).

- Enhanced the functionality in database and data ware housing concepts (OLAP, OLTP) Cube by creating KPI, Actions and Perspective using SQL Server Analysis Services 2008(SSAS).

- Involved in Cube Partitioning, Refresh strategy and planning and Dimensional data modeling in Analysis Services (SSAS).

- Generated Reports using Global Variables, Expressions and Functions for the reports using SSRS 2008.

- Designed logical/physical data models and defined primary key, foreign key using Erwin tool

- Developed Query for generating drill down reports in SSRS 2008. Prepared AD HOC reports through report builders.

- Created Windows Logins and privileges to User Accounts/Groups and objects.

- Used DBCC commands to troubleshoot issues related to database consistency.

Environment: SQL Server 2008, Oracle, MS Access, Excel, Windows Server 2008, VSS, ERWIN, Microsoft Visual Studio, MS Visio, MS Integration, Analysis and Reporting Services, Management Studio.

| ProTech Associates | February 2008 – February 2010 |

| SQL Server Developer/SSIS/SSRS/SSAS | Columbia, MD |

ProTech Associates was founded in 1984 and since then has devoted its efforts exclusively to develop technology solutions for associations. It closely works with each association customer to gain a deep understanding of their organization to develop a solution that best suited their specific needs. The project mainly dealt with creating SSIS packages, building cubes on the OLAP systems, generate reports by extracting data from the cube and checking the performance tuning of stored procedures, troubleshooting and maintenance.

Responsibilities:

- Designed logical and physical database structure to facilitate analysis of data from both operational and customer perspectives.

- Extensively used Joins and sub-queries for complex queries involving multiple tables from different databases.

- Wrote Stored Procedures and triggers to capture inserted, deleted and updated data from OLTP systems.

- Created Triggers to enforce data and referential integrity.

- Generated complex stored procedures and functions for better performance and flexibility.

- Created indexes for the quick retrieval of the data from the database.

- Defined constraints, rules, indexes and views based on business requirements.

- Used Execution Plan, SQL Profiler and database engine tuning advisor to optimize queries and enhance the performance of databases.

- Created database maintenance planner for the performance of SQL Server, which covers Database integrity checks and re-indexing.

- Created SSIS Packages using Pivot Transformation, Fuzzy Lookup, Derived Columns, Condition Split, Term extraction, Aggregate, Execute SQL Task, Data Flow Task, and Execute Package Task etc. to generate underlying data for the reports and to export data from Excel Spreadsheets, Text file, MS Access and CSV files.

- Used DataDefractor tool to normalize semi-structured Spreadsheets, fed it into the SSIS pipeline in the form of normalized output of factual data and contextual metadata.

- Reduced the repetition of the particular group in the Excel sheet using the Vertical Sub-Pages and Horizontal Sub-Pages Layout features using DataDefractor Tool.

- Deployed the packages on staging and production.

- Created SSIS packages for data Importing, Cleansing and Parsing. Extracted, cleaned and validated data from XML file into database using XML source.

- Set SQL Server configurations for SSIS packages and configured logging including windows event log and XML logging.

- Created ETL packages using Heterogeneous data sources (SQL Server, ORACLE, Flat Files, Excel source files, XML files etc.) and then loaded the data into destination tables by performing different kinds of transformations using SSIS

- Developed the packages with monitoring features and logging so that audit information of the packages and their execution results are loaded in to the audit table.

- Used Event Handlers for Custom Logging using Complex Store Procedures for various events (On Warning, On Pre and Post Execution, On Task Failed etc.)

- Performed Performance Tuning by using unblocking Transformations and row transformations for better Performance of SSIS packages.

- Generated parameterized/Drilldown reports using SSRS and Crystal Reports.

- Used dynamic parameters in SSRS to enable/disable the parameters based on user selection

- Scheduled Daily and weekly Jobs and Alerting using SQL Server Agent.

- Fine-tuned SSAS cube.

- Regular responsibilities for updating staging and dimensional databases as well as rebuild the Dimensions and cubes in Analysis Services.

- Worked on large data warehouse Analysis services servers and developed the different reports for the analysis from that servers.

- Identified the dimension, fact tables dimension, fact tables and designed the data warehouse using star schemas

- Extensively involved in the SSAS storage and partitions, and aggregations, calculation of queries with MDX, Data Mining Models, developing reports using MDX and SQL.

- Used Script Logic Script Logic Quest Lite Speed dramatically to reduce storage costs by quickly compressing data up to 95%.

- Migration of an MS Access application to SQL Server 2000.

- Created named query in SSAS Data Source View to get appropriate hierarchical summarize data for two dimensions.

- Constructed Cubes based on data volumes mostly adhering to Star Schema.

- Created Perspectives of the cube to simplify the process of browsing for the end users.

- Used MDX to create calculated members in the cube.

- · Used to support the data processing engine in database intensive operations like query

- Responsible for creating the reports based on the requirements using SSRS 2008.

- Created Drill-through, Drill-down, Linked Reports and Sub-Report using RDL.

- Generated periodic reports based on the statistical analysis of the data using SQL Server Reporting Services (SSRS).

- Built effective queries for high-performance reporting and rendered them to HTML, XML, PDF and Excel formats using Reporting Services (SSRS).

- Generated various Report models on Views (due to security issues) and deployed them on to the production reporting server and generated various Report models and ad-hoc reports.

- Created queries for generating drill down reports in SSRS 2008.

- Formatted the reports using the Global variables, expressions and Functions.

Environment: MS SQL Server 2008 R2, TSQL, SQL Integration Services (SSIS), SQL Reporting Services (SSRS), Data Warehousing(SSAS), MSIIS, MDX, SAP BPC,MS Access 2003/2005, VB.NET, C#, SQL Profiler.

| VHA (Value Health America) | August 2006 – January 2008 |

| SQL Server Developer/SSIS/SSRS | Irving, TX |

Value Health America (VHA) is a nationwide network of community-owned health care system and their physicians. The system here includes modules like patient registration system, doctor scheduling, lab track system, discharge system billing system, and deposit system. It is a multi-user system with great search facility. It has a dynamic facility for adding the registration type for each patient. An advanced search facility has helped clients, patients and doctors to search their desired records easily.

Responsibilities:

- Responsible for Data Modeling based on the client requirements.

- Prepared the Technical and Function specifications based on the project requirements.

- Responsible for data analysis, loading data from Data feed to Data Warehouse tables.

- Redesigning the ETL process from the existing legacy DTS packages into SSIS Packages.

- The SSIS Packages include, a Master Package which executes a number of Child Packages. The packages created include a variety of transformations like Execute SQL Task, Script Task, Execute Package Task, File Connection, Derived Column, and For Each Loop.

- Set package configuration in SSIS to redirect path from test environment to production environment.

- Migrated data from different sources (text based files, Excel spreadsheets, and Access) to SQL Server databases using SQL Server Integration Services (SSIS).

- Modified stored procedures to meet current design and used them in SQL Server.

- Developed queries in Data Warehouse in OLTP/OLAP environment.

- Created complex Stored Procedures, Datasets, Object Data Sources, and SQL Data Sources.

- Modified web pages to do database inserts, deletes, modify.

- Responsible for Full Report Cycle including Authoring, Managing, Security and generation of Reports.

- Responsible for creating Summary reports, Sub reports, Drill Down reports, Matrix reports.

- Created ad-hoc reports using SQL server 2005 Reporting Services (SSRS).

- Created report models from OLTP & OLAP.

- Trained 10 business users so that they can create reports from the report model I created.

- Troubleshooting Database performance issues and implementing necessary database changes.

- Involved in Development, Review and testing of the new system by implementing business rules and transformations.

Environment: SQL Server 2008/2005, T-SQL, DB Artisan, MS Integration, Analysis and Reporting Services, Management Studio, Query Analyzer, Windows 2007 Server, Oracle 9i.

| Life Insurance Corporation of India (LIC) | October 2005 – June 2006 |

| SQL Server Developer/SSIS/SSRS/DBA | Hyderabad, India |

Life Insurance is an OLTP system which stores the customer information regarding members and their payments and policies. The warehouse was running on SQL Server 2000 with a web based Dot Net front-end, Implemented infrastructure to support over 15 database servers consisting of more than 150 databases.

Responsibilities:

- Developing T-SQL queries, triggers, functions, cursors and stored procedures.

- Responsible for logical and physical design of SQL Server databases.

- Alter the schema for the required objects.

- Used DDL and DML for writing triggers, stored procedures, and data manipulation.

- Created views to restrict access to data in a table for security.

- Expert in extracting data using SSIS from OLTP to OLAP.

- Performed Bulk data migration using DTS and SSIS from flat files.

- Designed and optimized all the SSIS packages.

- Created SSIS packages to Export data from text file to SQL Server Database.

- Designed and implemented stylish report layouts.

- Identified the Data Source and defining them to build the Data Source Views.

- Identified and defined the Datasets for report generation and also included report parameters.

- Generated Reports using Global Variables, Expressions and Functions for the reports.

- Implemented full and differential backups and also created and managed DB objects.

- Make recommendations for File group Creation and Placement.

- Scheduling backups and automating weekend maintenance tasks.

- Created users and roles for login authentication and validating permissions.

- Administering MS- SQL Server, performing day-to-day tasks such as Database tuning by checking indexes.

- Upgraded SQL Server 2000 to SQL Server 2005.

- Assisted developers in tuning Stored Procedures, Triggers and Functions over performance issues.

Environment : SQL Server 2005/2000, T-SQL, DB Artisan, MS Reporting Services, Management Studio, Query Analyzer, Windows 2003/2000 Server, Oracle 9i.

| ICICI Bank | June 2004 - September 2005 |

| SQL Server Developer | Hyderabad, India |

ICICI InfoTech Services Limited is an IT solutions provider by consolidating all IT resources within the ICICI Group. ICICI group also offers a full range of financial services products to serve the needs of small and large corporations, government, institutional and individual investors.

Responsibilities:

- Setting up new MS SQL Server instances and databases.

- Going through the clients' requirements and coming up with database design and application architecture. Plan capacity specification requirements for new database servers.

- Created new database objects like Procedures, Functions, Packages, Triggers, Indexes and Views using T-SQL in SQL Server 2000.

- Capacity planning of MS SQL Servers. Implemented and maintained database security.

- Managing logins, roles, assigning rights and permissions. Created views to implement security.

- Worked on Client/Server tools like SQL Server Enterprise Manager and Query Analyzer to administer SQL Server. Used SQL Server System Tables to retrieve metadata.

- Troubleshoot and minimize production down issues.

- Have written several stored procedures and Triggers as per the client requirements.

- Running DBCC consistency checks, and fixing data corruption in application databases.

- Designed new job process, new database maintenance plans and DTS packages.

- Performance tuning of SQL queries and stored procedures using SQL Profiler and Index Tuning Wizard.

- Created scheduled maintenances and backup procedures to the database as a disaster recovery method.

- Data conversions and data loads from various databases and file structures.

- Monitor failed and long running MS SQL Server jobs.

- Monitor, tune, and analyze database performance and allocate server resources to achieve optimum database performance.

Environment: SQL Server 2000, Transact-SQL, Enterprise Manager, Query Analyzer, Oracle 8i, DTS Designer, SQL Profiler, Index Tuning Wizard and MS-ACCESS 2000.

SQL Server Partial Data backup, a new...

SQL Script to get Backup Information ...

SQL Server Partial Data backup, a new backup type in SQL Server 2005

One of my training participant was asked discussing about refresh of large databases where I suggested him a partial backup strategy but I was shocked when he misunderstood the things and understood that partial backup is same filegroup backup.

So let's understand,

What is SQL Server Partial Database Backup ?

Partial backups were introduced for SQL Server 2005. Partial backups will backup the primary filegroup, any read/write file groups, and any read-only files that are specified during the backup. The partial backup is designed to not backup any data that is placed in a read-only file group. So, you can use a partial backup to backup your entire database – except for the read only data. Partial backups are meant to be used in a simple recovery model situation, although they can be used in a full recovery model situation as well.

Data Loss:

The partial backup provides bare minimum protection from data loss. The partial backup serves as a base for differential partial backups – which can be applied to the partial backup to bring the database closer to a point in time near the actual database failure. A full recovery model with transaction logs provides the best chance to restore the database up to the point of failure.

Size:

A key advantage of using a partial backup as opposed to a full database backup is the reduced file size. The read only filegroups only have to be saved once, therefore the partial backups will be smaller than what would be required for a full database backup.

Resources Required:

The strain on the database will likely be much less for a partial database backup than it is for a full database backup since specified read only filegroups won’t have to be backed up.

How to take a Partial Database Backup ?

Backup Type Partial and Differential partial are newly introduced in SQL Server 2005 and still not supported by SSMS and Maintenance Plans. To take this sort of backup we need to manually write SQL statement like below

BACKUP DATABASE [ANKUR] READ_WRITE_FILEGROUPS TO DISK = N'c:\ANKUR-25Sep2011.BAK' -- Full Partial backup

BACKUP DATABASE [ANKUR] READ_WRITE_FILEGROUPS TO DISK = N'c:\ANKUR-25Sep2011.BAK' WITH DIFFERENTIAL -- Differential Partial backup

For More Details check BOL.

SQL Script for getting Backup Information

SQL Script to know when all was last backup was being performed ?

SQL Script to know when all was last backup was being performed and what type of backup was taken ?

SQL Script to what type of backup is being configured and when it was last run?

Backuping all databases is the most important maintenance part and a DBA should know, what type of backup and when was it taken last time.

SQL Script to get last backup date and time and type of backup

/*-------------------------------------------------------------------------------------------------------------------------------Description : This stored procedure will send out alert email if there is a blocking which lasted more than specified duration)

-- Copyright 2011 - DBATAG

-- Author : DBATAG

-- Created on : 20/09/2011

-- Version : 1.0

-- Dependencies :

-- Table Procedure

-- Description : Get backup date and time of last backup and type of backup and till now, how many backups has been performed on aparticular DB.

----------------------------------------------------------------------------------------------------------------------------*/

SELECT sDB.name AS DatabaseName

,MAX(sDB.recovery_model_desc) AS RecModel

,MAX(BS.backup_start_date) AS LastBackup

,MAX(CASE WHEN BS.type = 'D'

THEN BS.backup_start_date END)

AS LastFull

,SUM(CASE WHEN BS.type = 'D'

THEN 1 END)

AS CountFull

,MAX(CASE WHEN BS.type = 'L'

THEN BS.backup_start_date END)

AS LastLog

,SUM(CASE WHEN BS.type = 'L'

THEN 1 END)

AS CountLog

,MAX(CASE WHEN BS.type = 'I'

THEN BS.backup_start_date END)

AS LastDiff

,SUM(CASE WHEN BS.type = 'I'

THEN 1 END)

AS CountDiff

,MAX(CASE WHEN BS.type = 'F'

THEN BS.backup_start_date END)

AS LastFile

,SUM(CASE WHEN BS.type = 'F'

THEN 1 END)

AS CountFile

,MAX(CASE WHEN BS.type = 'G'

THEN BS.backup_start_date END)

AS LastFileDiff

,SUM(CASE WHEN BS.type = 'G'

THEN 1 END)

AS CountFileDiff

,MAX(CASE WHEN BS.type = 'P'

THEN BS.backup_start_date END)

AS LastPart

,SUM(CASE WHEN BS.type = 'P'

THEN 1 END)

AS CountPart

,MAX(CASE WHEN BS.type = 'Q'

THEN BS.backup_start_date END)

AS LastPartDiff

,SUM(CASE WHEN BS.type = 'Q'

THEN 1 END)

AS CountPartDiff

FROM sys.databases AS sDB

LEFT JOIN

msdb.dbo.backupset AS BS

ON BS.database_name = sDB.name

WHERE ISNULL(BS.is_damaged, 0) = 0 -- exclude damaged backups

GROUP BY sDB.name

ORDER BY sDB.name;

Note : In backupset table, we get the following backups status which means

- D = Database

- I = Differential database

- L = Log

- F = File or filegroup

- G =Differential file

- P = Partial

- Q = Differential partial

- NULL - not specified

Backup Type Partial and Differential partial are newly introduced in SQL Server 2005 and still not supported by SSMS and Maintenance Plans. To take this sort of backup we need to manually write SQL statement like below

BACKUP DATABASE [ANKUR] READ_WRITE_FILEGROUPS TO DISK = N'c:\ANKUR-25Sep2011.BAK' -- Full Partial backup

BACKUP DATABASE [ANKUR] READ_WRITE_FILEGROUPS TO DISK = N'c:\ANKUR-25Sep2011.BAK' WITH DIFFERENTIAL -- Differential Partial backup